Systematic Review Literature Search

About this project

This project seeks to develop methods and tools for improving how experts search for literature for systematic reviews. So far, research in this area by this team has provided useful tools for visually assisting Boolean query formulation, fully automatic methods for Boolean query refinement specifically in the systematic review domain, domain-specific retrieval models, and a test collection.

See below for a list of relevant publications, tools, description of the task, background information, and challenges.

Relevant Publications

Automatic Query Transformation

- Harry Scells and Guido Zuccon. 2018. Generating Better Queries for Systematic Reviews. In The 41st International ACM SIGIR Conference on Research & Development in Information Retrieval.

- Harry Scells and Guido Zuccon and Bevan Koopman. 2019. Automatic Boolean Query Refinement for Systematic Review Literature Search. In Proceedings of The Web Conference 2019.

- Harry Scells and Guido Zuccon and Mohamed A. Sharaf and Bevan Koopman. 2020. Sampling Query Variations for Learning to Rank to Improve Automatic Boolean Query Generation in Systematic Reviews. In Proceedings of The Web Conference 2020.

- Harry Scells and Leif Azzopardi and Guido Zuccon and Bevan Koopman. 2018. Query Variation Performance Prediction for Systematic Reviews. In The 41st International ACM SIGIR Conference on Research & Development in Information Retrieval.

- Shuai Wang and Hang Li and Harry Scells and Daniel Locke and Guido Zuccon. 2021. MeSH Term Suggestion for Systematic Review Literature Search. In Australasian Document Computing Symposium (ADCS 2021).

- Shuai Wang and Harry Scells and Bevan Koopman and Guido Zuccon. 2022. Automated MeSH Term Suggestion for Effective Query Formulation in Systematic Reviews Literature Search. In Intelligent Systems with Applications (ISWA) Technology-Assisted Review Systems Special Issue.

Query Formulation

- Harry Scells and Guido Zuccon and Bevan Koopman and Justin Clark. 2020. A Computational Approach for Objectively Derived Systematic Review Search Strategies. In 42nd European Conference on IR Research.

- Harry Scells and Guido Zuccon and Bevan Koopman and Justin Clark. 2020. Automatic Boolean Query Formulation for Systematic Review Literature Search. In Proceedings of The Web Conference 2020.

- Harry Scells and Guido Zuccon and Bevan Koopman. 2020. A Comparison of Automatic Boolean Query Formulation for Systematic Reviews. In Information Retrieval Journal.

- Shuai Wang and Harry Scells and Bevan Koopman and Guido Zuccon. 2023. Can ChatGPT Write a Good Boolean Query for Systematic Review Literature Search? In Proceedings of the 46th International ACM SIGIR Conference on Research and Development in Information Retrieval (SIGIR 2023).

Tools for Improving Literature Search

- Harry Scells and Guido Zuccon. 2018. searchrefiner: A Query Visualisation and Understanding Tool for Systematic Reviews. In Proceedings of the 27th ACM International Conference on Information and Knowledge Management.

- Harry Scells and Daniel Locke and Guido Zuccon. 2018. An Information Retrieval Experiment Framework for Domain Specific Applications. In The 41st International ACM SIGIR Conference on Research & Development in Information Retrieval.

- Harry Scells and Guido Zuccon and Bevan Koopman and Justin Clark. 2019. Visualising Systematic Review Search Strategies to Assist Information Specialists. In Proceedings of the Cochrane Colloquium 2019.

- Harry Scells and Guido Zuccon and Bevan Koopman and Justin Clark. 2019. Automatic Search Strategy Reformulation Interface for Systematic Reviews. In Proceedings of the Cochrane Colloquium 2019.

- Hang Li and Harry Scells and Guido Zuccon. 2020. Systematic Review Automation Tools for End-to-End Query Formulation. In Proceedings of the 43rd International ACM SIGIR Conference on Research and Development in Information Retrieval (SIGIR '20).

- Shuai Wang and Hang Li and Guido Zuccon. 2023. MeSH Suggester: A Library and System for MeSH Term Suggestion for Systematic Review Boolean Query Construction. In The 16th ACM International Conference on Web Search and Data Mining.

- Xinyu Mao and Teerapong Leelanupab (Kim) and Harry Scells and Guido Zuccon. 2025. DenseReviewer: A Screening Prioritisation Tool for Systematic Review based on Dense Retrieval. In Proceedings of the 47th European Conference on Information Retrieval (ECIR 2025).

Test Collections

- Harry Scells and Guido Zuccon and Bevan Koopman and Anthony Deacon and Shlomo Geva and Leif Azzopardi. 2017. A Test Collection for Evaluating Retrieval of Studies for Inclusion in Systematic Reviews. In Proceedings of the 40th annual international ACM SIGIR conference on Research and development in Information Retrieval.

- Shuai Wang and Harry Scells and Justin Clark and Guido Zuccon and Bevan Koopman. 2022. From Little Things Big Things Grow: A Collection with Seed Studies for Medical Systematic Review Literature Search. In Proceedings of the 45th International ACM SIGIR Conference on Research and Development in Information Retrieval (SIGIR 2022).

Retrieval Models

- Harry Scells and Guido Zuccon and Bevan Koopman and Anthony Deacon and Leif Azzopardi and Shlomo Geva. 2017. Integrating the framing of clinical questions via PICO into the retrieval of medical literature for systematic reviews. In Proceedings of the 26th ACM CIKM conference on Information and Knowledge Management.

- Harry Scells and Guido Zuccon and Bevan Koopman. 2020. You Can Teach an Old Dog New Tricks - Rank Fusion applied to Coordination Level Matching for Ranking in Systematic Reviews. In 42nd European Conference on IR Research.

- Shuai Wang and Harry Scells and Ahmed Mourad and Guido Zuccon. 2022. SDR for Systematic Reviews: A Reproducibility Study. In Proceedings of the 44th European Conference on Information Retrieval (ECIR 2022).

- Shuai Wang and Harry Scells and Bevan Koopman and Guido Zuccon. 2022. Neural Rankers for Effective Screening Prioritization in Medical Systematic Review Literature Search. In Australasian Document Computing Symposium (ADCS 2022).

- Shuai Wang and Harry Scells and Shengyao Zhuang and Bevan Koopman and Guido Zuccon. 2024. Zero-shot generative large language models for systematic review screening automation. In Proceedings of the 46th European Conference on Information Retrieval (ECIR 2024).

- Shuai Wang and Harry Scells and Bevan Koopman and Martin Potthast and Guido Zuccon. 2024. Generating Natural Language Queries for More Effective Systematic Review Screening Prioritisation. In Proceedings of the Annual International ACM SIGIR Conference on Research and Development in Information Retrieval in the Asia Pacific Region (SIGIR-AP 2024).

- Xinyu Mao and Bevan Koopman and Guido Zuccon. 2024. A Reproducibility Study of Goldilocks: Just-Right Tuning of BERT for TAR. In Proceedings of the 46th European Conference on Information Retrieval (ECIR 2024).

- Xinyu Mao and Shengyao Zhuang and Bevan Koopman and Guido Zuccon. 2024. Dense Retrieval with Continuous Explicit Feedback for Systematic Review Screening Prioritisation. In Proceedings of the 47th International ACM SIGIR Conference on Research and Development in Information Retrieval (SIGIR 2024).

What are systematic reviews?

Systematic reviews are a type of literature review that synthesises all available relevant information for a highly focused research question. In the medical domain, systematic reviews are the foundation of evidence-based medicine and are considered the highest quality evidence. They not only inform health care practitioners' decisions about diagnosis and treatment, but have also been used to inform governmental policy making. The main type of systematic review systematically searches for, critically appraises, and synthesises evidence from clinical studies (i.e., randomised controlled trials). There are, however, a number of other types of systematic reviews, such as rapid reviews (where time is a more important factor), scoping reviews (which synthesise many broad areas of literature), and umbrella reviews (which could be thought of as systematic reviews of systematic reviews).

Cost factors

There are a number of considerations to make when conducting a systematic review. Most important are the time and cost factors involved. A systematic review has a number of steps which must be completed systematically. These steps are usually defined well in advance and strictly adhered to during the construction of the review. At a high level, these steps are as follows:

1. Identification of research question.

2. Construction of study protocol.

3. Formulation of search strategy.

4. Screening and appraisal of studies.

5. Synthesis of studies.

6. Publication and distribution of review.

The main cost of a systematic review arises in step 4, screening and appraisal, where each study is assessed against the inclusion and exclusion criteria to decide whether it proceeds to the following step. Often, a search strategy retrieves thousands, if not tens of thousands, of results. The systematic nature of these reviews calls for inspecting every result retrieved. It is also common for screening and appraisal to be performed in parallel by multiple reviewers to reduce bias (increasing the cost further). This screening process can often take months, and sometimes a year or more (depending on how many results are retrieved).
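To make the scale of this cost concrete, the following minimal sketch estimates the screening workload in person-hours. Every figure in it (the number of results, the seconds spent per record, and the number of reviewers) is an illustrative assumption, not a measurement from any review, and `screening_hours` is a hypothetical helper name.

```python
def screening_hours(num_results: int, seconds_per_record: float, num_reviewers: int = 2) -> float:
    """Person-hours needed when every reviewer screens every retrieved record once."""
    return num_results * seconds_per_record * num_reviewers / 3600


# Assumed inputs: 10,000 retrieved records, 60 seconds per title and abstract,
# and two reviewers screening independently.
hours = screening_hours(10_000, 60, num_reviewers=2)
print(f"{hours:.1f} person-hours")  # prints "333.3 person-hours"
```

Under these assumptions, halving the number of retrieved records halves the workload, which is why the size of the result set matters so much.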

Reducing cost factors

There has been much research into tools that help researchers reduce the monetary and time costs of undertaking a systematic review. Typically, these tools help to prepare and maintain reviews, re-rank results through active learning, or automate evidence synthesis, among other tasks. There has also been much research into methods for automatically prioritising the results in the screening and appraisal phase. Systematic review literature search is unlike typical web search (e.g., Google) because a Boolean retrieval model is used. Most research on ranking in the Information Retrieval domain has focused primarily on the “ad-hoc” task of ranking documents for a query similar to one that would be issued to a modern search engine. Ranking the results of a Boolean query cannot be performed with the same principles, and there have been many studies showing the ineffectiveness of Boolean queries compared with the types of queries used in modern search engines. Screening prioritisation for systematic review literature search therefore uses approaches such as active learning, rather than improving the retrieval model.

Why bother with Boolean search?

A Boolean query allows complete control over the search results. While it does not provide a mechanism for ranking, specifying exactly what should be retrieved through set-based operators, term matching, and field restrictions gives experts precise control. Furthermore, many search engines used for systematic review literature search (e.g., PubMed) also incorporate medical ontologies (i.e., MeSH), explicit stemming, and complex Boolean operators such as adjacency. These features are also the reason the cost of a review is so high: constructing a Boolean query that effectively satisfies the information need of the review is extremely complex.
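The set-based nature of Boolean retrieval can be illustrated with a minimal sketch. The toy collection, the nested-tuple query representation, and the function names below are all assumptions made for illustration; real systems such as PubMed use their own query syntax and additionally support field restrictions, MeSH terms, and adjacency operators, none of which are modelled here.

```python
# Toy collection (hypothetical data): document id -> set of lowercase terms.
DOCS = {
    1: {"diabetes", "insulin", "randomised", "trial"},
    2: {"diabetes", "diet", "cohort"},
    3: {"hypertension", "randomised", "trial"},
    4: {"diabetes", "insulin", "case", "report"},
}


def matching(term: str) -> set[int]:
    """Return the ids of documents that contain the term."""
    return {doc_id for doc_id, terms in DOCS.items() if term in terms}


def evaluate(query) -> set[int]:
    """Evaluate a nested query: either a term string or (operator, operand, ...)."""
    if isinstance(query, str):
        return matching(query)
    op, *operands = query
    results = [evaluate(q) for q in operands]
    if op == "AND":
        return set.intersection(*results)
    if op == "OR":
        return set.union(*results)
    if op == "NOT":  # first operand minus all remaining operands
        return results[0].difference(*results[1:])
    raise ValueError(f"unknown operator: {op}")


# diabetes AND (insulin OR diet) AND (randomised NOT hypertension)
query = ("AND", "diabetes", ("OR", "insulin", "diet"), ("NOT", "randomised", "hypertension"))
print(sorted(evaluate(query)))  # prints [1]
```

The result is an unordered set: a document either satisfies the query or it does not, which is why the retrieval model itself offers no ranking.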

Improving Boolean query formulation

There are significant time and cost savings to be had by improving the effectiveness of Boolean queries. A more effective Boolean query retrieves fewer irrelevant studies while still retrieving the same number of relevant studies. Screening prioritisation only helps to bubble the most relevant studies to the top of the list; reviewers must still screen all studies systematically. A more effective query translates to fewer studies to screen overall. Even small decreases in the number of irrelevant studies can significantly reduce the cost and time of systematic review construction. Reducing the time it takes to construct systematic reviews can lead to more accurate and up-to-date evidence-based medicine, improving decisions by health care professionals.
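One way to read "more effective" here is in terms of precision and recall: the refined query keeps recall fixed while raising precision. The sketch below compares two hypothetical result sets; the document ids and set sizes are invented for illustration and do not come from any real review.

```python
def precision_recall(retrieved: set[int], relevant: set[int]) -> tuple[float, float]:
    """Precision and recall of a retrieved set against the known relevant set."""
    found = retrieved & relevant
    precision = len(found) / len(retrieved) if retrieved else 0.0
    recall = len(found) / len(relevant) if relevant else 0.0
    return precision, recall


relevant = set(range(20))   # assumed: 20 relevant studies exist
broad = set(range(2000))    # assumed: original query retrieves 2,000 studies
refined = set(range(500))   # assumed: refined query retrieves 500 studies

for name, retrieved in [("broad", broad), ("refined", refined)]:
    p, r = precision_recall(retrieved, relevant)
    print(f"{name}: precision={p:.3f} recall={r:.2f} to_screen={len(retrieved)}")
```

In this toy setting both queries retrieve all 20 relevant studies, but the refined query leaves 1,500 fewer studies for reviewers to screen.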

Our Tools

We have developed a number of tools to assist with the construction of systematic reviews.

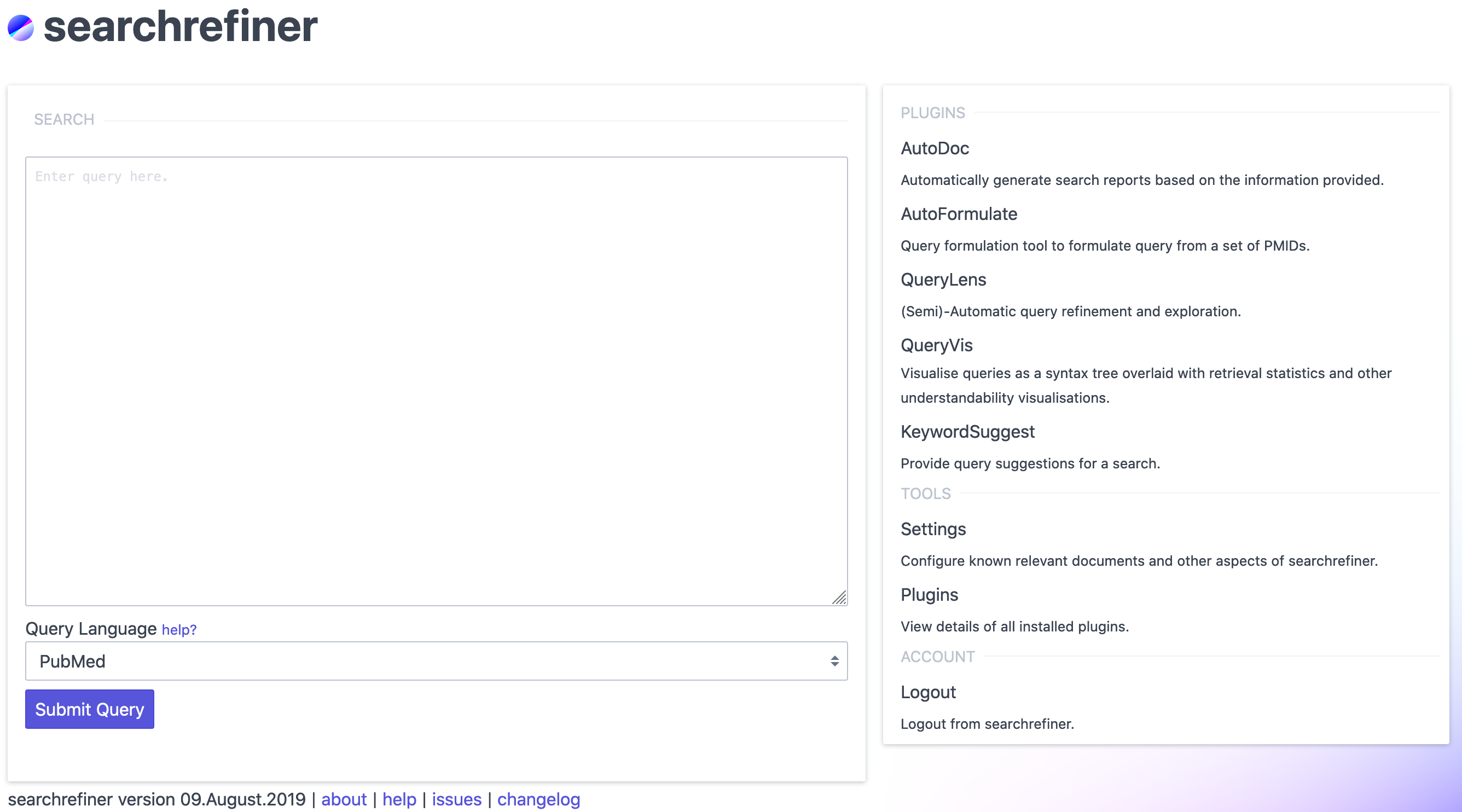

searchrefiner

searchrefiner is an interactive interface for visualising and understanding queries used to retrieve medical literature for systematic reviews. searchrefiner is an open source project; the source is available on GitHub. It is currently in development; however, you may preview the interface at this demo link (note that users must be approved prior to use).

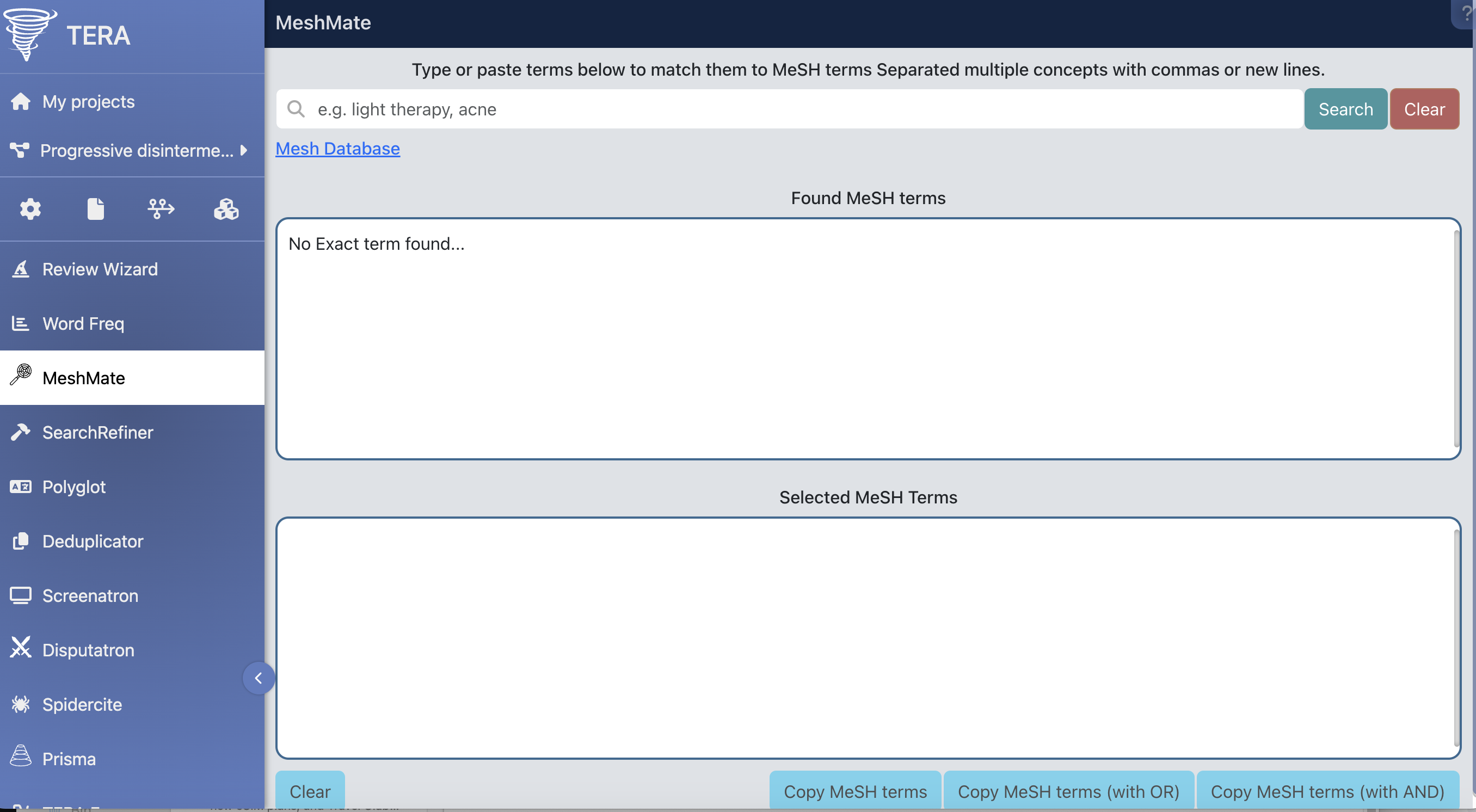

MeSHSuggester (MeSHMate)

MeSHMate (MeSHSuggester) is a Web-based MeSH term suggestion prototype system integrated in tera tools. It allows users to obtain suggestions from a number of underlying methods, including the BERT-based neural suggestion methods Atomic-BERT, Semantic-BERT, and Fragment-BERT. To use it, first create an account at tera-tools and then access the MeSHMate tool.

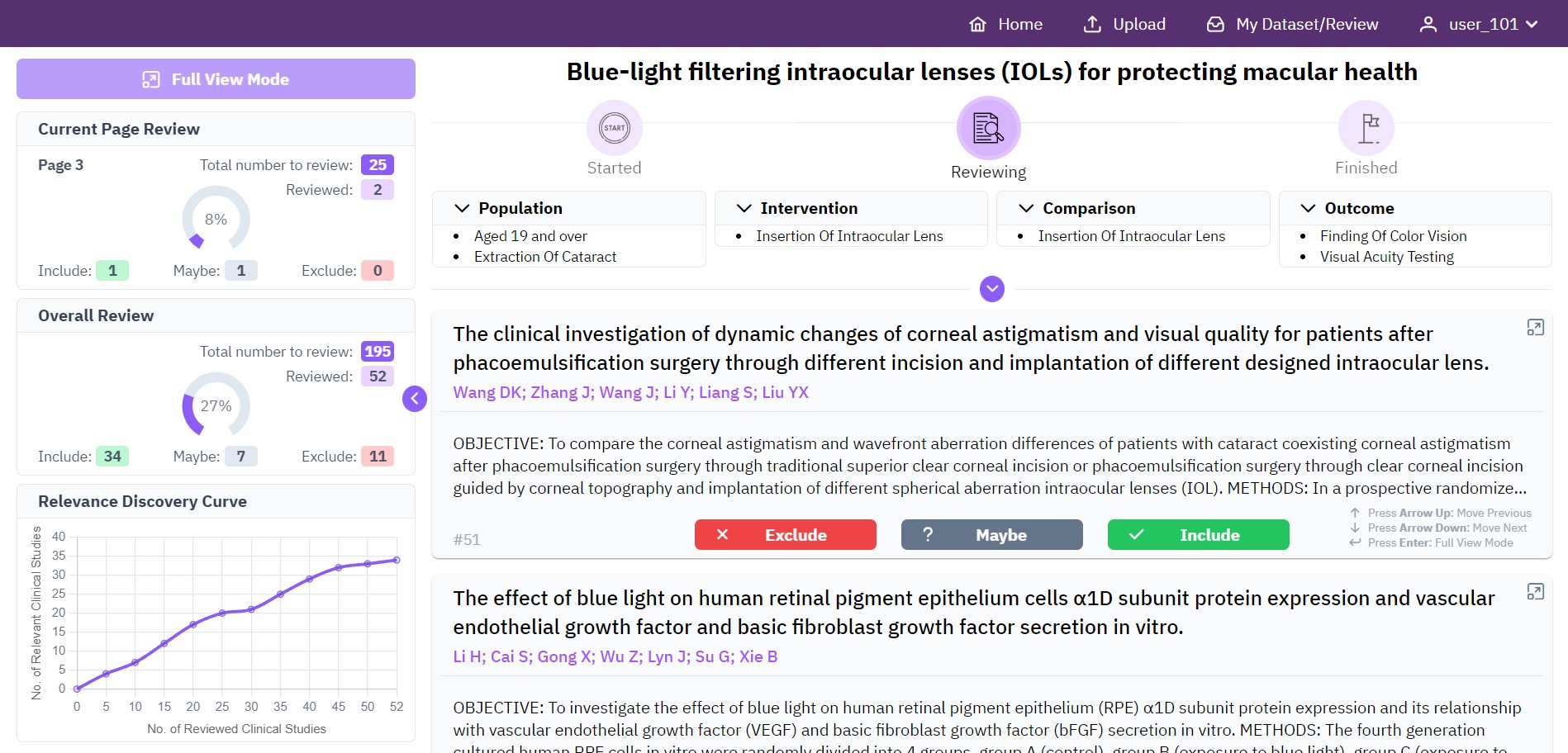

DenseReviewer

DenseReviewer is a screening prioritization tool for systematic reviews, leveraging dense retrieval and relevance feedback to rank studies efficiently during title and abstract screening. It dynamically updates rankings based on user assessments, optimizing the screening process. The tool includes a web-based interface for interactive screening and a Python library for integration and experimentation. It supports structured PICO queries, allows self-hosting via Docker, and improves efficiency in identifying relevant studies.
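The general idea of re-ranking with relevance feedback over dense vectors can be sketched as follows. This is not DenseReviewer's API or its actual update rule: the Rocchio-style update, the function names, the feedback rate, and the two-dimensional embeddings are all assumptions chosen to keep the example small, whereas a real system would obtain embeddings from an encoder model.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def update_query(query, doc, relevant, rate=1.0):
    """Rocchio-style update: move toward relevant documents, away from irrelevant ones."""
    sign = 1.0 if relevant else -1.0
    return [q + sign * rate * d for q, d in zip(query, doc)]


def rank(query, embeddings, screened):
    """Rank the unscreened documents by similarity to the current query vector."""
    remaining = [d for d in embeddings if d not in screened]
    return sorted(remaining, key=lambda d: cosine(query, embeddings[d]), reverse=True)


# Hypothetical 2-dimensional document embeddings and initial query vector.
embeddings = {"a": [1.0, 0.0], "b": [0.8, 0.6], "c": [0.0, 1.0], "d": [0.6, 0.8]}
query = [1.0, 0.2]
screened = set()

first = rank(query, embeddings, screened)

# Simulated assessment: the reviewer judges the top-ranked document irrelevant.
top = first[0]
screened.add(top)
query = update_query(query, embeddings[top], relevant=False)
second = rank(query, embeddings, screened)

print(first)
print(second)
```

After the negative judgement, the documents least similar to the rejected one move to the top of the remaining list, which is the behaviour the paragraph above describes as dynamically updating rankings.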

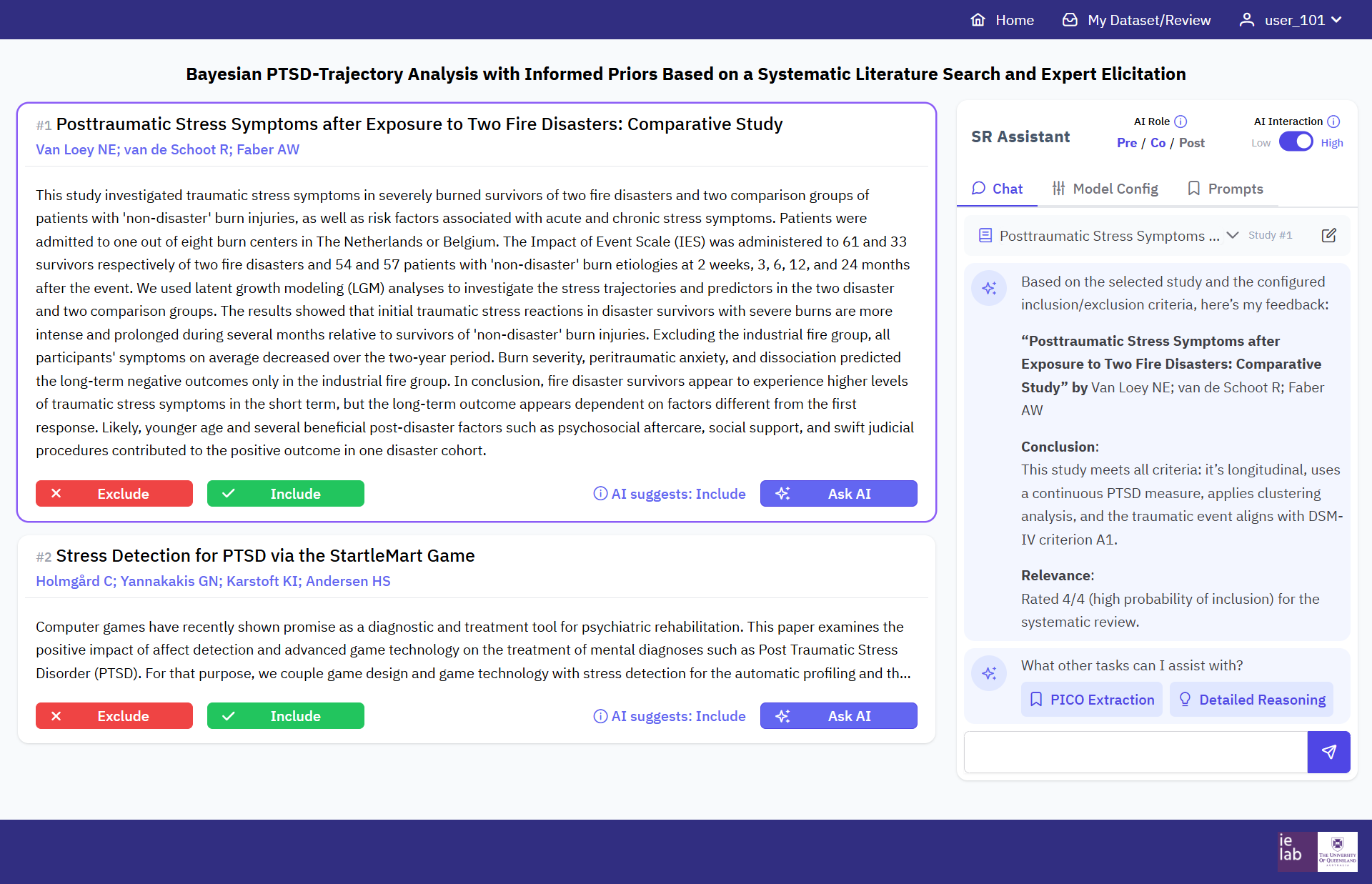

AiReview

AiReview is an open platform designed to accelerate systematic reviews (SRs) using large language models (LLMs). It provides an extensible framework and a web-based interface for LLM-assisted title and abstract screening. AiReview enables researchers to leverage LLMs transparently by offering different roles for AI involvement (pre-reviewer, co-reviewer, and post-reviewer) to support decision-making, live collaboration, and quality control. The tool integrates open-source and commercial LLMs, allowing users to customize screening criteria, interaction levels, and model settings. It aims to improve efficiency, transparency, and accessibility in SRs.